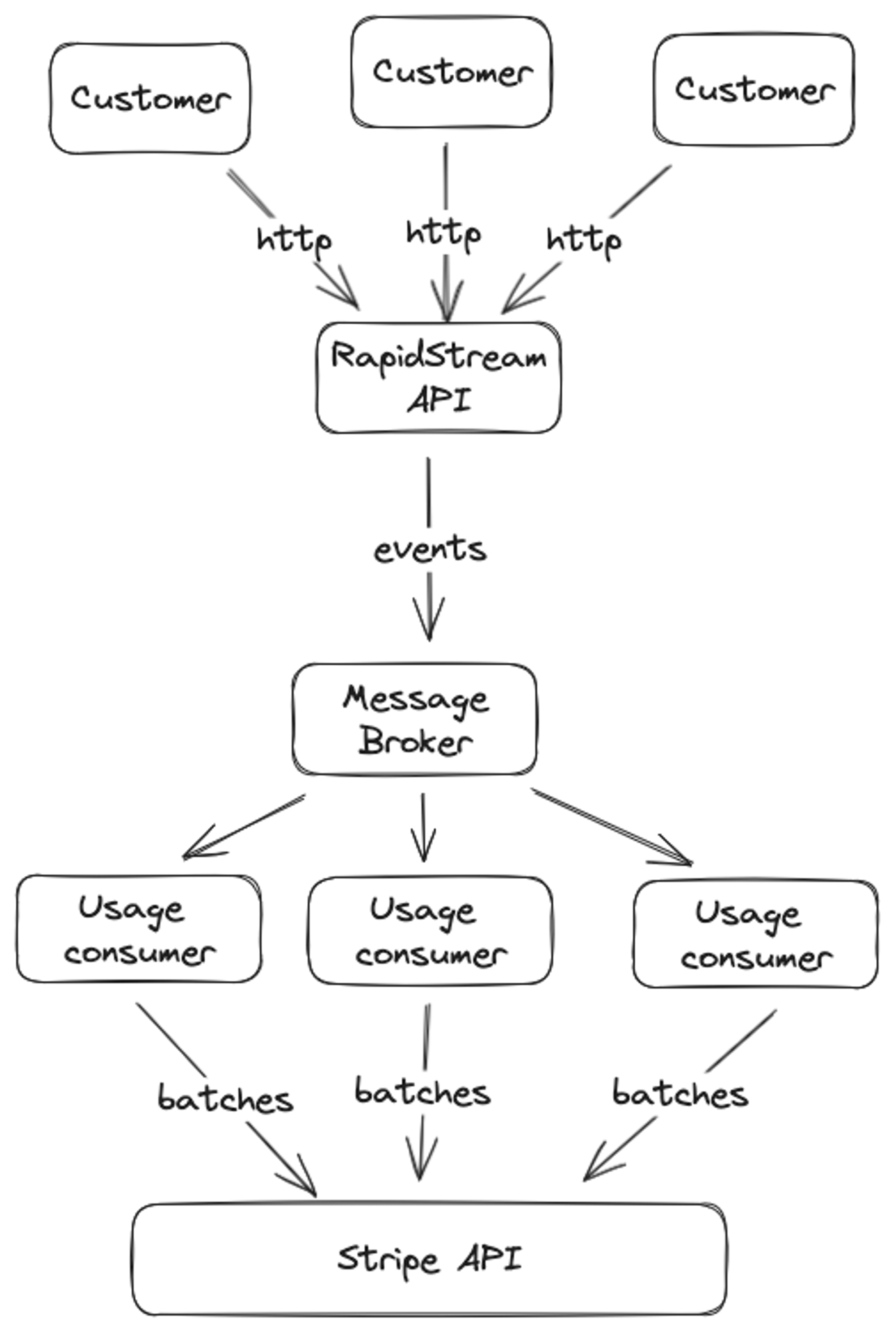

In this post, we’ll share how we worked around Stripe’s rate limit by combining a message broker with a VPC setup.

Our Approach: Message Broker + VPC Setup

To stay within Stripe’s rate limit, we write the usage events generated during each HTTP request to a message broker, specifically LavinMQ hosted by CloudAMQP, instead of sending them to Stripe directly. This decouples request handling from Stripe writes and lets us handle high request volumes efficiently.
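A minimal sketch of the producer side, assuming a Python service. To keep it self-contained, an in-memory `queue.Queue` stands in for the broker; against LavinMQ you would publish over AMQP instead (for example with pika’s `channel.basic_publish`). `UsageEvent`, `publish`, and `handle_request` are hypothetical names:

```python
import json
import queue
from dataclasses import asdict, dataclass

# Stand-in for the broker: an in-memory queue instead of an AMQP channel.
broker: queue.Queue = queue.Queue()


@dataclass
class UsageEvent:
    customer_id: str  # hypothetical field names
    quantity: int


def publish(event: UsageEvent) -> None:
    # Serialize and enqueue; nothing here talks to Stripe.
    broker.put(json.dumps(asdict(event)).encode("utf-8"))


def handle_request(customer_id: str) -> dict:
    # ... serve the actual request here ...
    publish(UsageEvent(customer_id=customer_id, quantity=1))
    # Respond immediately; a separate consumer writes to Stripe later.
    return {"status": "ok"}
```

Because the handler only enqueues, a traffic spike grows the queue rather than the number of Stripe calls made in the request path.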

By setting up a VPC (Virtual Private Cloud) environment, we reached publish and consume rates of up to 10k requests per second. Bandwidth was our primary concern, since the system had to handle the large volume of data being transmitted.
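A back-of-the-envelope estimate shows why bandwidth, not just message count, matters at this rate. The 1 kB average message size is a hypothetical figure for illustration, not a measured one, and AMQP framing and TCP overhead are ignored. Each message enters the broker once from a publisher and leaves once to a consumer, so the broker carries it twice:

$$
\begin{aligned}
B_{\text{publish}} &= r \cdot s = 10\,000\ \text{msg/s} \times 1\ \text{kB} = 10\ \text{MB/s} = 80\ \text{Mbit/s} \\
B_{\text{broker}} &= 2 \cdot B_{\text{publish}} = 160\ \text{Mbit/s}
\end{aligned}
$$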

Benefits of VPC Setup

The VPC setup offered us several benefits:

- Faster communication: Traffic bypasses the public internet and uses private IP addresses, which reduces latency and improves overall performance.

- Increased security: Data stays within a private network, reducing the risk of unauthorized access or eavesdropping.

How We Handle Stripe Writes

To handle Stripe writes efficiently, we push usage numbers to Stripe in batches instead of making one API call per event. This ensures that our system can write data to Stripe without exceeding the rate limit imposed by their API.
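A minimal sketch of the batching idea, assuming usage events arrive as hypothetical `(customer_id, quantity)` pairs. `write` stands in for whatever Stripe API call records usage; the post does not name it, so it is left as a placeholder. Summing per customer turns many events into one write per customer per batch:

```python
from collections import Counter
from typing import Callable, Iterable, Tuple

UsageEvent = Tuple[str, int]  # hypothetical (customer_id, quantity) pair


def aggregate(events: Iterable[UsageEvent]) -> Counter:
    """Sum quantities per customer across a batch of events."""
    totals: Counter = Counter()
    for customer_id, quantity in events:
        totals[customer_id] += quantity
    return totals


def push_batch(events: Iterable[UsageEvent], write: Callable[[str, int], None]) -> int:
    """Write one aggregated number per customer; return the number of API calls."""
    totals = aggregate(events)
    for customer_id, total in totals.items():
        write(customer_id, total)  # placeholder for the actual Stripe call
    return len(totals)
```

For example, a batch of 1,000 events spread across 10 customers results in 10 writes instead of 1,000.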

We’ve also implemented a timeout mechanism: when load is low and a batch fills slowly, the numbers are still pushed to Stripe at regular intervals (e.g., every 5 minutes). Combined with batching, this keeps our system from overwhelming Stripe’s API while maintaining a consistent flow of data.
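One way a size-or-timeout flush could look, as a hypothetical `UsageBatcher`: a batch is written when it reaches `max_events` events, or when its oldest event is `max_age` seconds old (300 s mirrors the 5-minute example). The thresholds, names, and the injectable `clock` are assumptions for illustration:

```python
import time
from collections import Counter
from typing import Callable, Optional


class UsageBatcher:
    def __init__(
        self,
        write: Callable[[str, int], None],  # placeholder for the Stripe call
        max_events: int = 1000,
        max_age: float = 300.0,  # seconds; 300 s = every 5 minutes
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._write = write
        self._max_events = max_events
        self._max_age = max_age
        self._clock = clock
        self._pending: Counter = Counter()
        self._count = 0
        self._first_at: Optional[float] = None  # arrival time of oldest event

    def add(self, customer_id: str, quantity: int) -> None:
        if self._first_at is None:
            self._first_at = self._clock()
        self._pending[customer_id] += quantity
        self._count += 1
        if self._count >= self._max_events:  # size trigger (high load)
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a batch whose oldest event is too old."""
        if self._first_at is not None and self._clock() - self._first_at >= self._max_age:
            self.flush()  # time trigger (low load)

    def flush(self) -> None:
        for customer_id, total in self._pending.items():
            self._write(customer_id, total)
        self._pending.clear()
        self._count = 0
        self._first_at = None
```

The consumer would call `add` for each message and `tick` whenever it wakes up, for example after a broker poll times out, so a quiet period still ends in a flush.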

Scaling Our Services in Kubernetes

Our services run on a Kubernetes cluster, which lets us scale our consuming services as needed. However, this also means that when a pod shuts down unexpectedly, for example because the cluster scales it down, the numbers it holds must still be pushed to Stripe without losing any data.

To achieve this, we’ve implemented graceful shutdowns for our pods.

Conclusion

Working around Stripe’s rate limit required us to think creatively. By combining a message broker with a VPC setup, we were able to overcome the limits imposed by their API. It shows once again how important scalability and reliability are when building high-traffic applications.